The Rise of DeepSeek: Why It

DeepSeek Explained: The Innovation Everyone

It took about a month for the finance world to start freaking out about DeepSeek, but when it did, it took more than half a trillion dollars — or one entire Stargate — off Nvidia’s market cap. It wasn’t just Nvidia, either: Tesla, Google, Amazon, and Microsoft tanked.


DeepSeek’s two AI models, released in quick succession, put it on par with the best available from American labs, according to Alexandr Wang, Scale AI CEO. And DeepSeek seems to be working within constraints that mean it trained much more cheaply than its American peers. One of its recent models is said to cost just $5.6 million in the final training run, which is about the salary an American AI expert can command. Last year, Anthropic CEO Dario Amodei said the cost of training models ranged from $100 million to $1 billion. OpenAI’s GPT-4 cost more than $100 million, according to CEO Sam Altman. DeepSeek seems to have just upended our idea of how much AI costs, with potentially enormous implications across the industry.

This has all happened over just a few weeks. On Christmas Day, DeepSeek released a large language model (v3) that caused a lot of buzz. Its second model, R1, a reasoning model released last week, has been called “one of the most amazing and impressive breakthroughs I’ve ever seen” by Marc Andreessen, VC and adviser to President Donald Trump. The advances from DeepSeek’s models show that “the AI race will be very competitive,” says Trump’s AI and crypto czar David Sacks. Both models are partially open source, minus the training data.

DeepSeek’s successes call into question whether billions of dollars in compute are actually required to win the AI race. The conventional wisdom has been that big tech will dominate AI simply because it has the spare cash to chase advances. Now, it looks like big tech has simply been lighting money on fire. Figuring out how much the models actually cost is a little tricky: as Scale AI’s Wang points out, DeepSeek may not be able to speak honestly about what kind of GPUs it has, or how many, because of US sanctions.

Even if critics are correct and DeepSeek isn’t being truthful about what GPUs it has on hand (napkin math suggests the optimization techniques it used mean it is being truthful), it won’t take long for the open-source community to find out, according to Hugging Face’s head of research, Leandro von Werra. His team started working over the weekend to replicate and open-source the R1 recipe, and once researchers can create their own version of the model, “we’re going to find out pretty quickly if numbers add up.”

What is DeepSeek?

Led by CEO Liang Wenfeng, the two-year-old DeepSeek is China’s premier AI startup. It spun out from a hedge fund founded by engineers from Zhejiang University and is focused on “potentially game-changing architectural and algorithmic innovations” to build artificial general intelligence (AGI) — or at least, that’s what Liang says. Unlike OpenAI, it also claims to be profitable.

In 2021, Liang started buying thousands of Nvidia GPUs (just before the US put sanctions on chips) and launched DeepSeek in 2023 with the goal to “explore the essence of AGI,” or AI that’s as intelligent as humans. Liang echoes many of the same lofty talking points as OpenAI CEO Altman and other industry leaders. “Our destination is AGI,” Liang said in an interview, “which means we need to study new model structures to realize stronger model capability with limited resources.”

So, that’s exactly what DeepSeek did. With a few innovative technical approaches that allowed its models to run more efficiently, the team claims the final training run for v3, the base model R1 is built on, cost $5.6 million. That’s a 95 percent cost reduction from OpenAI’s o1. R1 wasn’t built from scratch: DeepSeek trained it on top of its own v3 base model, and it also released smaller “distilled” versions of R1 built on existing open-source models, including Meta’s Llama and Alibaba’s Qwen. While the company’s training data mix isn’t disclosed, DeepSeek did mention it used synthetic data, or artificially generated information (which might become more important as AI labs seem to hit a data wall).
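The $5.6 million figure is narrower than it sounds. As we understand DeepSeek’s v3 technical report, it is GPU hours for the final training run multiplied by an assumed rental price, and it leaves out earlier research, ablation experiments, salaries, and the cost of buying the hardware. A back-of-envelope sketch of that arithmetic, using the report’s published breakdown (the variable and function names are ours, for illustration only):

```python
# Headline cost of DeepSeek v3's final training run, reconstructed from the
# technical report's breakdown of H800 GPU hours. Excludes prior research,
# ablation runs, staff, and hardware purchase costs.
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
ASSUMED_RENTAL_USD_PER_GPU_HOUR = 2.0  # the report's assumed H800 rental price


def final_run_cost(gpu_hours, usd_per_hour):
    """Return (total GPU hours, total cost) as hours times an hourly rental rate."""
    total_hours = sum(gpu_hours.values())
    return total_hours, total_hours * usd_per_hour


total_hours, cost_usd = final_run_cost(GPU_HOURS, ASSUMED_RENTAL_USD_PER_GPU_HOUR)
print(f"{total_hours:,} GPU hours x ${ASSUMED_RENTAL_USD_PER_GPU_HOUR:.2f}/hr "
      f"= ${cost_usd / 1e6:.3f} million")
```

Because the number counts only rented time for the last run, it doesn’t by itself answer skeptics’ questions about DeepSeek’s total spending or hardware.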

Without the training data, it isn’t exactly clear how much of a “copy” this is of o1 — did DeepSeek use o1 to train R1? Around the time that the first paper was released in December, Altman posted that “it is (relatively) easy to copy something that you know works” and “it is extremely hard to do something new, risky, and difficult when you don’t know if it will work.” So the claim is that DeepSeek isn’t going to create new frontier models; it’s simply going to replicate old models. OpenAI investor Joshua Kushner also seemed to say that DeepSeek “was trained off of leading US frontier models.”

R1 used two key optimization tricks, former OpenAI policy researcher Miles Brundage told The Verge: more efficient pre-training and reinforcement learning on chain-of-thought reasoning. DeepSeek found smarter ways to use cheaper GPUs to train its AI, and part of what helped was using a new-ish technique for requiring the AI to “think” step by step through problems using trial and error (reinforcement learning) instead of copying humans. This combination allowed the model to achieve o1-level performance while using way less computing power and money.
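DeepSeek’s R1 paper describes its reinforcement-learning step as a method it calls Group Relative Policy Optimization (GRPO): the model samples several answers to the same problem, each answer is scored by simple rules (is the final answer correct, is the reasoning in the expected format), and each answer is then judged relative to its own group’s average instead of by a separately trained critic model. The toy Python sketch below illustrates only that scoring-and-ranking step. The tag format, reward values, and function names are illustrative assumptions loosely modeled on the paper, and the actual policy update to the model’s weights is omitted.

```python
import re
from statistics import mean, pstdev

# Illustrative answer format (an assumption, loosely modeled on R1's prompt template)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)
THINK_RE = re.compile(r"<think>.*?</think>", re.DOTALL)


def rule_based_reward(completion, reference):
    """Score one sampled completion with simple checks; no learned reward model."""
    answer = ANSWER_RE.search(completion)
    score = 0.0
    if answer and answer.group(1).strip() == reference:
        score += 1.0  # accuracy reward: the final answer is correct
    if answer and THINK_RE.search(completion):
        score += 0.1  # format reward: reasoning and answer sit in the expected tags
    return score


def group_relative_advantages(rewards, eps=1e-6):
    """Rate each sample against its own group: (reward - mean) / std."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four hypothetical sampled attempts at "What is 12 * 7?"
samples = [
    "<think>12 * 7 = 84</think><answer>84</answer>",
    "<think>12 * 7 = 74</think><answer>74</answer>",
    "<answer>84</answer>",
    "The answer is 84.",
]
rewards = [rule_based_reward(s, "84") for s in samples]
advantages = group_relative_advantages(rewards)
for s, r, a in zip(samples, rewards, advantages):
    print(f"reward={r:.1f} advantage={a:+.2f}  {s!r}")
```

Attempts that beat their group’s average get a positive advantage and would be reinforced; the rest would be discouraged. Since the score comes from rules rather than from imitating human-written solutions, this matches the trial-and-error framing above.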

“DeepSeek v3 and also DeepSeek v2 before that are basically the same sort of models as GPT-4, but just with more clever engineering tricks to get more bang for their buck in terms of GPUs,” Brundage said.

To be clear, other labs employ these techniques. DeepSeek used “mixture of experts,” which activates only parts of the model for a given query; GPT-4 reportedly did that, too. DeepSeek’s version innovated on this concept by creating more finely grained expert categories and developing a more efficient way for them to communicate, which made the training process itself more efficient. The DeepSeek team also developed something called Multi-Head Latent Attention (MLA), which dramatically reduced the memory required to run AI models by compressing the key-value cache the model uses to store and retrieve information about earlier tokens.
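Both ideas can be illustrated in a few lines of Python. The first half of this sketch shows top-k mixture-of-experts routing: a small router scores every expert, but only the k highest-scoring experts actually run, and their outputs are blended using renormalized router weights. The second half is back-of-envelope arithmetic for why caching one compressed latent vector per token, the core idea behind multi-head latent attention, uses far less memory than caching full keys and values for every attention head. The toy experts and model dimensions are hypothetical round numbers, not DeepSeek’s configuration, and the sketch ignores details of DeepSeek’s real designs such as shared experts, load balancing, and separate positional-encoding keys.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_weights, experts, k=2):
    """Send token vector x to its top-k experts and blend their outputs."""
    # Router logit per expert: dot product of x with that expert's router vector
    logits = [sum(w * v for w, v in zip(row, x)) for row in router_weights]
    probs = softmax(logits)
    # Keep only the k most probable experts; the others are never called
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)
        gate = probs[i] / norm  # renormalize weights over the chosen experts
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top


# Hypothetical toy experts: expert i scales its input by (i + 1) and logs each call
calls = []


def make_expert(i):
    def expert(x):
        calls.append(i)
        return [(i + 1) * v for v in x]
    return expert


experts = [make_expert(i) for i in range(4)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
output, chosen = moe_forward([1.0, 0.5], router_weights, experts, k=2)
print("experts used:", chosen, "output:", [round(v, 3) for v in output])


# KV-cache memory per token, summed over layers (16-bit values = 2 bytes each)
def kv_bytes_standard(n_layers, n_heads, head_dim, bytes_per_value=2):
    # Standard attention caches a key vector and a value vector for every head
    return n_layers * 2 * n_heads * head_dim * bytes_per_value


def kv_bytes_latent(n_layers, latent_dim, bytes_per_value=2):
    # Latent attention caches one compressed vector per token per layer
    # and re-expands it into keys and values when needed
    return n_layers * latent_dim * bytes_per_value


standard = kv_bytes_standard(n_layers=60, n_heads=128, head_dim=128)
latent = kv_bytes_latent(n_layers=60, latent_dim=512)
print(f"standard: {standard:,} B/token, latent: {latent:,} B/token, "
      f"{standard // latent}x smaller")
```

The routing half shows where mixture of experts saves compute (two of four experts run per token here), while the cache half shows where latent attention saves memory, which matters most when serving many long conversations at once.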

What is shocking the world isn’t just the architecture that led to these models but the fact that it was able to so rapidly replicate OpenAI’s achievements within months, rather than the year-plus gap typically seen between major AI advances, Brundage added.

OpenAI positioned itself as uniquely capable of building advanced AI, and this public image just won the support of investors to build the world’s biggest AI data center infrastructure. But DeepSeek’s quick replication shows that technical advantages don’t last long — even when companies try to keep their methods secret.

“These close sourced companies, to some degree, they obviously live off people thinking they’re doing the greatest things and that’s how they can maintain their valuation. And maybe they overhyped a little bit to raise more money or build more projects,” von Werra says. “Whether they overclaimed what they have internally, nobody knows, obviously it’s to their advantage.”

Money talk

The investment community has been delusionally bullish on AI for some time now — pretty much since OpenAI released ChatGPT in 2022. The question has been less whether we are in an AI bubble and more, “Are bubbles actually good?” (“Bubbles get an unfairly negative connotation,” wrote DeepWater Asset Management, in 2023.)

It’s not clear that investors understand how AI works, but they nonetheless expect it to provide, at minimum, broad cost savings. Two-thirds of investors surveyed by PwC expect productivity gains from generative AI, and a similar number expect an increase in profits as well, according to a December 2024 report.

The public company that has benefited most from the hype cycle has been Nvidia, which makes the sophisticated chips AI companies use. The idea has been that, in the AI gold rush, buying Nvidia stock was investing in the company that was making the shovels. No matter who came out dominant in the AI race, they’d need a stockpile of Nvidia’s chips to run the models. On December 27th, the shares closed at $137.01 — almost 10 times what Nvidia stock was worth at the beginning of January 2023.

DeepSeek’s success upends the investment theory that drove Nvidia to sky-high prices. If the company is indeed using chips more efficiently — rather than simply buying more chips — other companies will start doing the same. That may mean less of a market for Nvidia’s most advanced chips, as companies try to cut their spending.

“Nvidia’s growth expectations were definitely a little ‘optimistic’ so I see this as a necessary reaction,” says Naveen Rao, Databricks VP of AI. “The current revenue that Nvidia makes is not likely under threat; but the massive growth experienced over the last couple of years is.”

Nvidia wasn’t the only company that was boosted by this investment thesis. The Magnificent Seven — Nvidia, Meta, Amazon, Tesla, Apple, Microsoft, and Alphabet — outperformed the rest of the market in 2023, inflating in value by 75 percent. They continued this staggering bull run in 2024, with every company except Microsoft outperforming the S&P 500 index. Of these, only Apple and Meta were untouched by the DeepSeek-related rout.

The craze hasn’t been limited to the public markets. Startups such as OpenAI and Anthropic have also hit dizzying valuations — $157 billion and $60 billion, respectively — as VCs have dumped money into the sector. Profitability hasn’t been as much of a concern. OpenAI expected to lose $5 billion in 2024, even though it estimated revenue of $3.7 billion.

DeepSeek’s success suggests that just splashing out a ton of money isn’t as protective as many companies and investors thought. It hints that small startups can be much more competitive with the behemoths, even disrupting the known leaders through technical innovation. So while it’s been bad news for the big boys, it might be good news for small AI startups, particularly since DeepSeek’s models are open source.

Just as the bull run was at least partly psychological, the sell-off may be, too. Hugging Face’s von Werra argues that cheaper training won’t actually reduce GPU demand. “If you can build a super strong model at a smaller scale, why wouldn’t you again scale it up?” he asks. “The natural thing that you do is you figure out how to do something cheaper, why not scale it up and build a more expensive version that’s even better.”

Optimization as a necessity

But DeepSeek isn’t just rattling the investment landscape — it’s also a clear shot across the US’s bow by China. The advances made by the DeepSeek models suggest that China can catch up easily to the US’s state-of-the-art tech, even with export controls in place.

The export controls on state-of-the-art chips, which began in earnest in October 2023, are relatively new, and their full effect has not yet been felt, according to RAND expert Lennart Heim and Sihao Huang, a PhD candidate at Oxford who specializes in industrial policy.

The US and China are taking opposite approaches. While China’s DeepSeek shows you can innovate through optimization despite limited compute, the US is betting big on raw power — as seen in Altman’s $500 billion Stargate project with Trump.

“Reasoning models like DeepSeek’s R1 require a lot of GPUs to use, as shown by DeepSeek quickly running into trouble in serving more users with their app,” Brundage said. “Given this and the fact that scaling up reinforcement learning will make DeepSeek’s models even stronger than they already are, it’s more important than ever for the US to have effective export controls on GPUs.”

DeepSeek’s chatbot has surged past ChatGPT in app store rankings, but it comes with serious caveats. Startups in China are required to submit a data set of 5,000 to 10,000 questions that the model will decline to answer, roughly half of which relate to political ideology and criticism of the Communist Party, The Wall Street Journal reported. The app blocks discussion of sensitive topics like Taiwan’s democracy and Tiananmen Square, while user data flows to servers in China — raising both censorship and privacy concerns.

There are some people who are skeptical that DeepSeek’s achievements were done in the way described. “We question the notion that its feats were done without the use of advanced GPUs to fine tune it and/or build the underlying LLMs the final model is based on,” says Citi analyst Atif Malik in a research note. “It seems categorically false that ‘China duplicated OpenAI for $5M’ and we don’t think it really bears further discussion,” says Bernstein analyst Stacy Rasgon in his own note.

For others, it feels like the export controls backfired: instead of slowing China down, they forced innovation. While the US restricted access to advanced chips, Chinese companies like DeepSeek and Alibaba’s Qwen found creative workarounds — optimizing training techniques and leveraging open-source technology while developing their own chips.

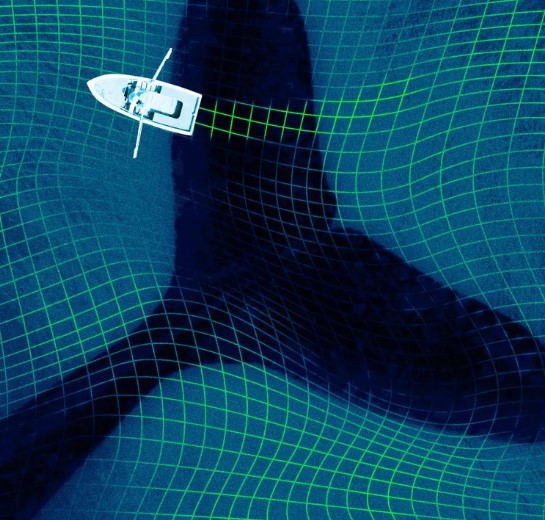

Doubtless someone will want to know what this means for AGI, which is understood by the savviest AI experts as a pie-in-the-sky pitch meant to woo capital. (In December, OpenAI’s Altman notably lowered the bar for what counted as AGI from something that could “elevate humanity” to something that will “matter much less” than people think.) Because AI superintelligence is still pretty much imaginary, it’s hard to know whether it’s even possible, much less whether DeepSeek has made a reasonable step toward it. In this sense, the whale logo checks out; this is an industry full of Ahabs. The end game on AI is still anyone’s guess.

The future AI leaders asked for

AI has been a story of excess: data centers consuming energy on the scale of small countries, billion-dollar training runs, and a narrative that only tech giants could play this game. For many, it feels like DeepSeek just blew that idea apart.

While it might seem that models like DeepSeek, by reducing training costs, can solve AI’s environmental problem, it isn’t that simple, unfortunately. Both Brundage and von Werra agree that more efficient use of resources means companies are likely to use even more compute to get better models. Von Werra also says this means smaller startups and researchers will be able to access the best models more easily, so the need for compute will only rise.

DeepSeek’s use of synthetic data isn’t revolutionary, either, though it does show that it’s possible for AI labs to create something useful without robbing the entire internet. But that damage has already been done; there is only one internet, and it has already trained models that will be foundational to the next generation. Synthetic data isn’t a complete solution to finding more training data, but it’s a promising approach.

The most important thing DeepSeek did was simply to be cheaper. You don’t have to be technically inclined to understand that powerful AI tools might soon be much more affordable. AI leaders have promised that progress is going to happen quickly. One possible change may be that someone can now make frontier models in their garage.

The race for AGI is largely imaginary. Money, however, is real enough. DeepSeek has commandingly demonstrated that money alone isn’t what puts a company at the top of the field. The longer-term implications for that may reshape the AI industry as we know it.
